9. DSpotter

DSpotter, as mentioned previously, is a library that provides local voice and command recognition. Its main characteristics are:

High noise robustness

Low resource requirement

Global language support

Easy command customization

High portability

9.1. Creating a Model

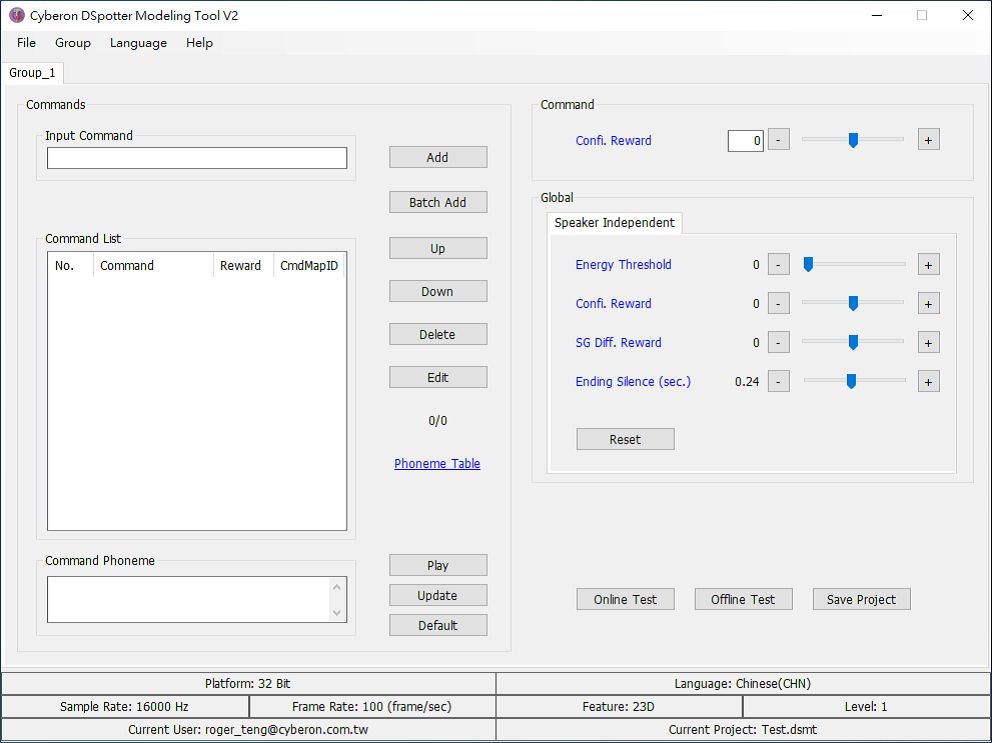

The user can create customized trigger/ commands only using text input. This can be achieved using the DSMT (DSpotter Modeling Tool). The tool is a windows-based application:

Figure 12 Cyberon DSpotter Modeling Tool

The DSMT application allows:

Creating customized trigger/commands by text input

Online test: streaming audio input

Offline test: pre-recorded wave files input

Parameter adjustment for performance optimization

Details on operating this utility are outside the scope of this document. More information is available in the Renesas - DSpotter Documentation.

9.2. Update the project with a new Model

A Model holds all the information needed by the voice recognition algorithm. This section describes how to update the Model in a project. The Model for this application example holds a wake-up word and command words, which makes this a two-stage command recognition. The voice commands are:

Hello Renesas, Lights on.

Hello Renesas, Lights off.

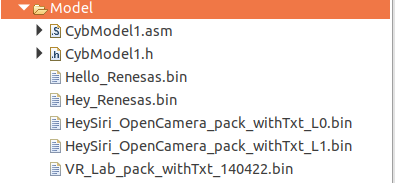

Figure 13 Model directory

DSMT produces files whose names end in “_pack_withTxt.bin”. The generated file must be placed inside the “Model” directory, as shown in the figure above. To activate the Model, update the contents of CybModel1.asm, which contains the path and name of the Model to be used.

The project must be built as DA1470x-00-Debug_OQSPI. To enable the voice recognition functionality, ENABLE_DSPOTTER_LIB must be defined (#define ENABLE_DSPOTTER_LIB) in the “periph_setup.h” file.
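As an illustration of how such a compile-time switch typically works, the following minimal sketch guards a code path with the macro. Only the macro name ENABLE_DSPOTTER_LIB comes from this document; the helper voice_recognition_enabled() is hypothetical and is not part of the SDK or of the example project.

```c
/* In the project, this definition lives in periph_setup.h. */
#define ENABLE_DSPOTTER_LIB

/*
 * Hypothetical helper (not part of the SDK): reports whether the voice
 * recognition code path was compiled in.
 */
static int voice_recognition_enabled(void)
{
#ifdef ENABLE_DSPOTTER_LIB
    return 1;   /* macro defined: recognition code is built */
#else
    return 0;   /* macro missing: recognition code is left out */
#endif
}
```

If the definition is removed, the same helper returns 0, which mirrors how code guarded by the macro would be excluded from the build.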

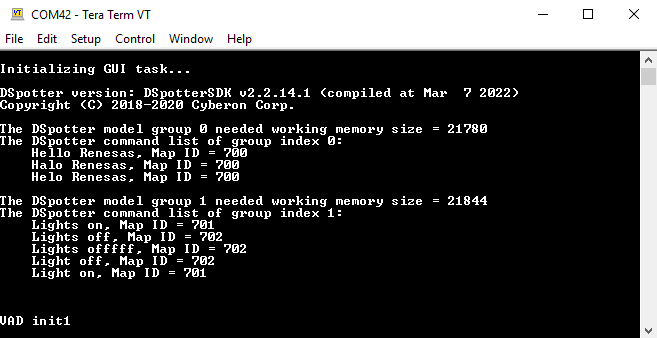

During program execution the PrintGroupCommandList() function gets the current active Model as input and prints out all staged commands. Above is an example showing the debug messages during startup.

Figure 14 Spotter debug output

As seen in the figure, the Model has two groups. The first group holds the wake-up word and the second holds the command words.
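The two-group layout can be pictured with the following sketch. It is an illustrative in-memory description only: the structure, the names model_groups and print_groups(), and the printed format are assumptions made for this example. They do not reproduce the DSMT file format, the PrintGroupCommandList() implementation, or its actual output. The sketch also assumes that command IDs are zero-based within each group.

```c
#include <stddef.h>
#include <stdio.h>

/* Hypothetical description of one recognition stage (group). */
struct command_group {
    const char *stage;              /* human-readable stage name */
    const char *const *commands;    /* phrases recognized in this stage */
    size_t count;                   /* number of phrases */
};

static const char *const wake_words[]    = { "Hello Renesas" };
static const char *const command_words[] = { "Lights on", "Lights off" };

/* Group 0 is the wake-up stage, group 1 is the command stage. */
static const struct command_group model_groups[] = {
    { "wake-up word", wake_words,    1 },
    { "command word", command_words, 2 },
};

#define GROUP_COUNT (sizeof model_groups / sizeof model_groups[0])

/* Prints every group with its commands; returns the total command count. */
static size_t print_groups(void)
{
    size_t total = 0;

    for (size_t g = 0; g < GROUP_COUNT; g++) {
        printf("Group %zu (%s):\n", g, model_groups[g].stage);
        for (size_t c = 0; c < model_groups[g].count; c++) {
            /* Assumption: IDs restart at zero in each group. */
            printf("  [%zu] %s\n", c, model_groups[g].commands[c]);
            total++;
        }
    }
    return total;
}
```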

9.3. DSpotter API flow chart

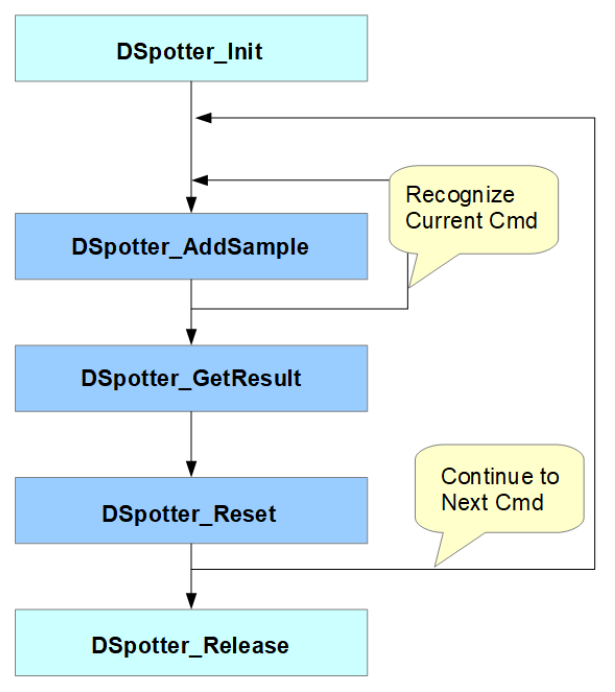

On program startup the system is set in sleep mode to minimize power consumption. When voice activity is detected the VAD will produce an interrupt and wake up the system. The audio manager is triggered, and audio samples are sent to DSpotter algorithm. The algorithm flow chart is presented in the figure below.

Figure 15 DSpotter flowchart

DSpotter_Init() creates a recognizer for recognizing groups of commands.

DSpotter_AddSample() receives the voice samples and performs recognition. The application should call this function repeatedly to add recorded raw PCM data to the recognizer until a recognition is found, at which point the function returns DSPOTTER_SUCCESS and the application can call DSpotter_GetResult() to retrieve the recognized result. The recommended length of the input array is 480 samples (= 960 bytes, that is, 2 bytes per sample).

DSpotter_GetResult() returns the recognition result: a zero-based command ID on success, or a negative error code otherwise. The application can perform different actions according to the returned command ID. In this example, a light is turned ON or OFF.

DSpotter_Reset() resets the algorithm and initializes the system to accept the next command, if available. In this example, upon successful recognition of “Hello Renesas”, the algorithm is set up to recognize “Lights on” or “Lights off”.

DSpotter_Release() releases the recognizer.
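The call sequence described above can be sketched as a small two-stage loop. The sketch below does not call the DSpotter library and does not reproduce its function signatures: the mock_* functions, the MOCK_* return codes, and the structure are hypothetical stand-ins that only imitate the roles of DSpotter_AddSample(), DSpotter_GetResult(), and DSpotter_Reset(). The mock "recognizes" a phrase when the first sample of a frame is positive, which is purely a test convention. Only the frame size of 480 samples (960 bytes) is taken from the text.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define FRAME_SAMPLES 480                                  /* recommended frame length */
#define FRAME_BYTES   (FRAME_SAMPLES * sizeof(int16_t))    /* 960 bytes */

#define MOCK_SUCCESS   0    /* hypothetical: a phrase was recognized */
#define MOCK_NEED_MORE 1    /* hypothetical: keep feeding samples */

enum stage { STAGE_WAKE_WORD, STAGE_COMMAND };
enum light { LIGHT_OFF, LIGHT_ON };

/* Hypothetical stand-in for the recognizer state. */
struct mock_recognizer {
    enum stage stage;   /* group currently being listened for */
    int pending_id;     /* last recognized command ID, or -1 */
};

/* Plays the role of DSpotter_Reset(): selects the stage to listen for. */
static void mock_reset(struct mock_recognizer *r, enum stage next)
{
    r->stage = next;
    r->pending_id = -1;
}

/* Plays the role of DSpotter_AddSample(). A positive first sample k
 * means "command ID k - 1 was spoken in this frame". */
static int mock_add_sample(struct mock_recognizer *r,
                           const int16_t *pcm, size_t n)
{
    assert(r != NULL && pcm != NULL && n > 0);
    if (pcm[0] > 0) {
        r->pending_id = pcm[0] - 1;
        return MOCK_SUCCESS;
    }
    return MOCK_NEED_MORE;
}

/* Plays the role of DSpotter_GetResult(). */
static int mock_get_result(const struct mock_recognizer *r)
{
    return r->pending_id;
}

/* Feeds `frames` frames of FRAME_SAMPLES samples each and returns the
 * resulting light state. */
static enum light run_two_stage(const int16_t *pcm, size_t frames,
                                enum light light)
{
    struct mock_recognizer r;

    mock_reset(&r, STAGE_WAKE_WORD);        /* start by listening for the wake-up word */

    for (size_t i = 0; i < frames; i++) {
        const int16_t *frame = pcm + i * FRAME_SAMPLES;

        if (mock_add_sample(&r, frame, FRAME_SAMPLES) != MOCK_SUCCESS)
            continue;                       /* nothing recognized yet */

        int id = mock_get_result(&r);

        if (r.stage == STAGE_WAKE_WORD) {
            /* ID 0 in the first group stands for "Hello Renesas". */
            mock_reset(&r, id == 0 ? STAGE_COMMAND : STAGE_WAKE_WORD);
        } else {
            if (id == 0)
                light = LIGHT_ON;           /* "Lights on" */
            else if (id == 1)
                light = LIGHT_OFF;          /* "Lights off" */
            mock_reset(&r, STAGE_WAKE_WORD);    /* wait for the next wake-up word */
        }
    }
    return light;
}
```

A command frame that arrives without a preceding wake-up frame leaves the light unchanged, which is the behavior expected from a two-stage recognition.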